Despite my diverse engagements with different Microsoft stacks, ranging from Power Platform to Azure AI, I have always been a .NET (C#) guy, and because of that I have a special place in my heart for all things XAML and ML.NET ❤

As we’ve seen a lot of improvements and contributions in the AI/ML space from Microsoft and the community, I wanted to share something super exciting and new in ML.NET. Tools and frameworks have improved so much that I wanted to explore new ways to solve more business problems, rather than just focusing on Sentiment Analysis. The fantastic developers and content creators who are still tackling Sentiment Analysis in many ways are awesome, and I totally respect that. Anyway, today we’re going to talk about the latest offering in ML.NET Model Builder Preview: Object Detection.

Don’t get me wrong. I love the Sentiment Analysis use case too, and that’s the reason I built SentimentAnalyzer last year, but let’s not stop there!

Know ‘What’ Before ‘How’

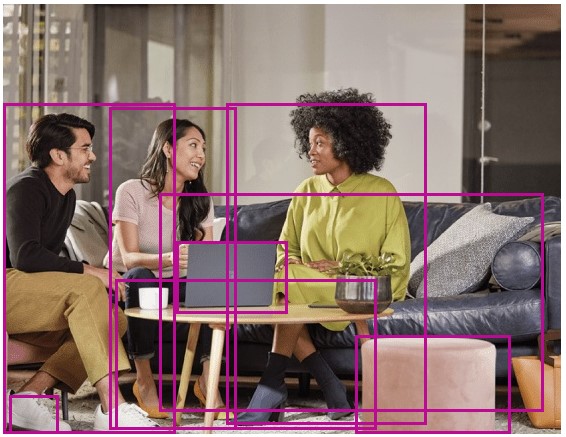

Object Detection is a common Computer Vision problem. It differs from simple image classification because it goes a step further: it not only categorizes the objects in an image but also locates each one of them.

| Image Classification | Object Detection |
|---|---|
| {Person} | {Footwear, Person, Laptop, Plant} |
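To make the difference concrete, here is a small Python sketch of the two kinds of result. The class and field names are my own hypothetical ones (not ML.NET's API), and the scores and boxes are made up purely for illustration:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClassificationResult:
    """Image classification: a single label (and score) for the whole image."""
    label: str
    score: float


@dataclass
class BoundingBox:
    """Axis-aligned box in pixels: left/top corner plus width and height."""
    left: float
    top: float
    width: float
    height: float


@dataclass
class Detection:
    """Object detection: a label and score *plus* where the object is."""
    label: str
    score: float
    box: BoundingBox


def labels_found(detections: List[Detection], min_score: float = 0.5) -> List[str]:
    """Distinct labels detected at or above a confidence threshold, sorted."""
    return sorted({d.label for d in detections if d.score >= min_score})


# Hypothetical outputs for a photo like the one in the table above.
classification = ClassificationResult(label="Person", score=0.97)
detections = [
    Detection("Person", 0.95, BoundingBox(120, 40, 200, 420)),
    Detection("Laptop", 0.88, BoundingBox(260, 230, 150, 90)),
    Detection("Plant", 0.71, BoundingBox(20, 60, 80, 180)),
    Detection("Footwear", 0.64, BoundingBox(150, 430, 60, 30)),
]
print(classification.label)      # Person
print(labels_found(detections))  # ['Footwear', 'Laptop', 'Person', 'Plant']
```

The classifier answers "what is this image?" with one label, while the detector returns a list where every entry also carries a box.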

ML.NET already offered scenarios for many problems, from Image Classification to Anomaly Detection to Text Classification, but until last week Object Detection wasn’t available, and there’s a fair reason for that.

Training an object detection model, which may have more than a million parameters, on labeled data requires A LOT of compute ⛽ (several hundred GPU hours). So the awesome ML.NET team had to come up with an option that works for everyone: instead of using the power of your own machine, they give you the ability to train your model on Azure ML, right from the Visual Studio experience!

But How…?

First, install the new Model Builder (Preview) extension from the Visual Studio Marketplace. Once it’s installed, create a .NET Core console app. Right-click the project, go to Add, and click Machine Learning.

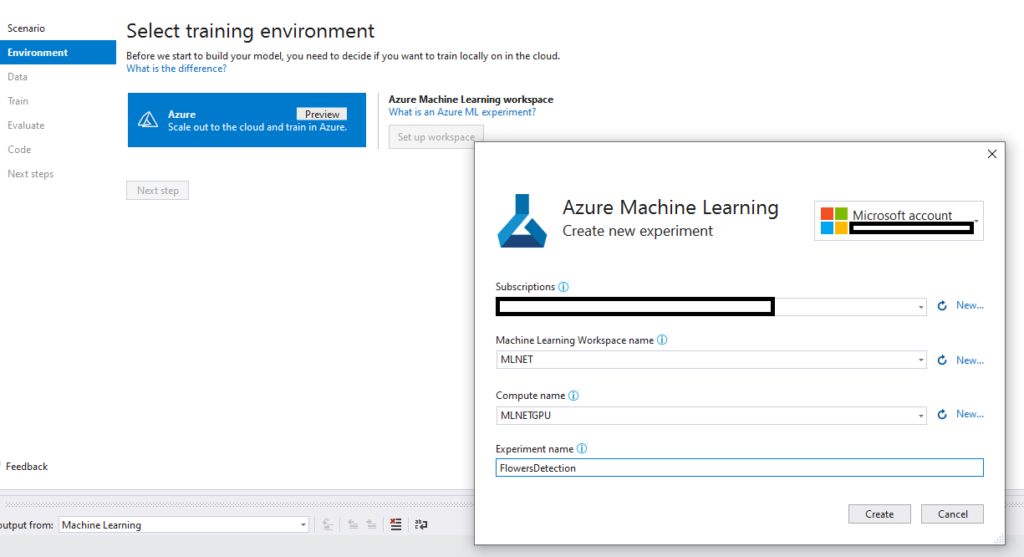

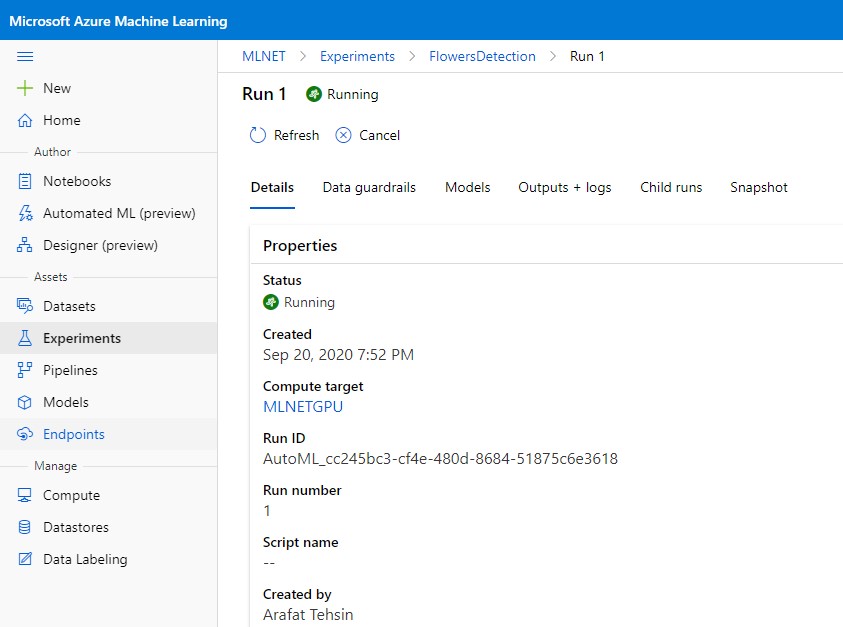

Once you’re there, choose the Object Detection scenario, and you will be asked to set up your Azure Machine Learning workspace. Please note that you must have an Enterprise workspace. Basic did not work for me, so I had to upgrade.

You can create the Workspace, Compute and Experiment directly from the Visual Studio experience, and I had no issues with it at all. Once you’re done, click *Create* (it won’t create anything if the experiment already exists, so don’t worry).

It’s all about your Data

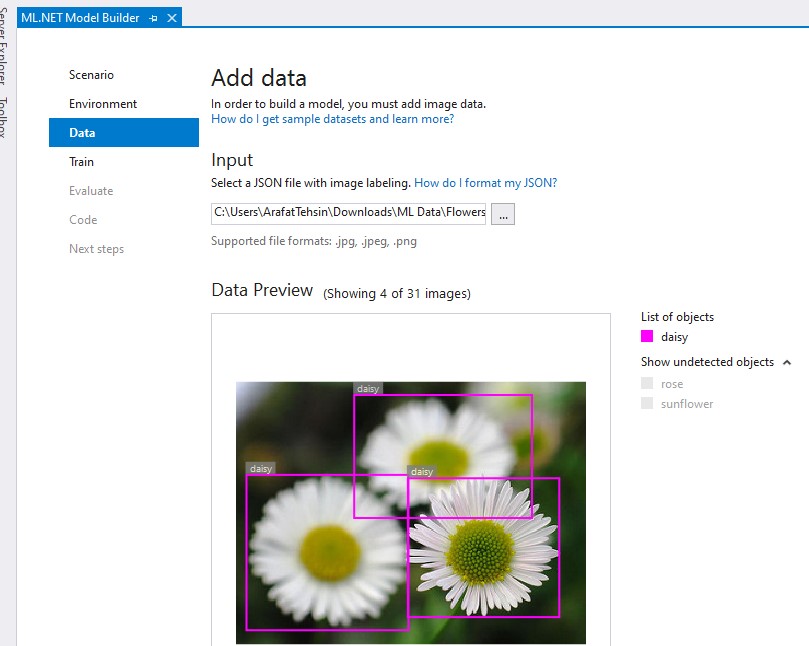

The next step is to add Data. You will be asked to add a JSON file with the image labels. Before you wonder what that is and how you’ll get it, just take a deep breath 💡

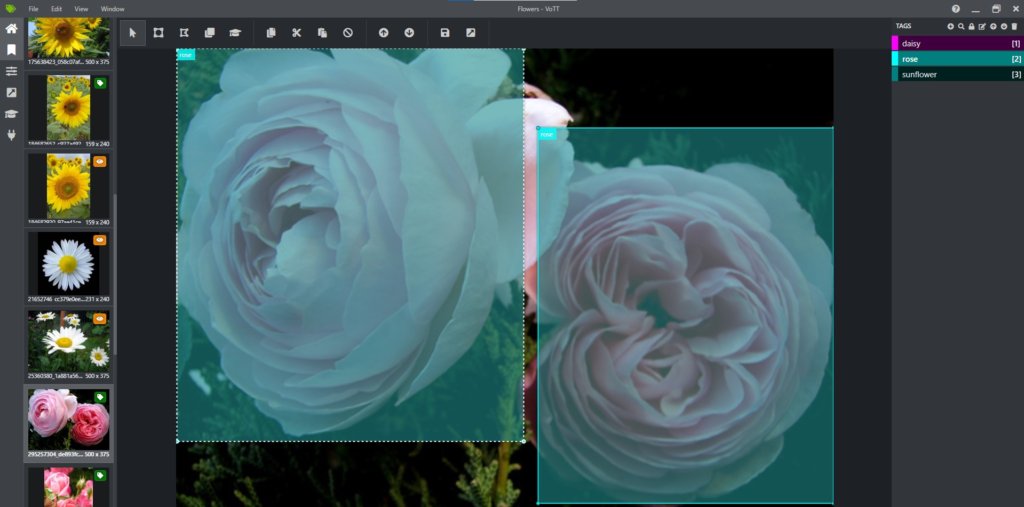

The Microsoft Commercial Software Engineering team has done a GREAT job 💪 building a tool that helps you label your images with little effort: the Visual Object Tagging Tool (VoTT). I’m keeping this post’s scope limited to the Object Detection scenario, so please go through this doc; hopefully it will give you enough knowledge to label your images. If you run into any issues, don’t forget to connect with me on Twitter or comment here with your specific question, and I’ll be more than happy to help!

Once you’re done labeling your data (in my case, images of flowers from the well-known TensorFlow flowers dataset), export the project. The export is a JSON file, which you then select in the Data section.
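Before pointing Model Builder at the export, it can help to sanity-check the file yourself. Below is a minimal Python sketch, assuming the VoTT JSON export layout as I understand it: a top-level `assets` object keyed by asset id, where each entry holds an `asset` (with a `name`) and a list of `regions`, each carrying `tags` and a `boundingBox`. Please compare these field names with your own export; they are my assumption, and the function name `summarize_vott_export` is hypothetical.

```python
import json
from collections import Counter
from typing import Dict


def summarize_vott_export(text: str) -> Dict[str, int]:
    """Parse a VoTT JSON export and count the labeled regions per tag.

    ASSUMPTION: the export has a top-level "assets" object keyed by asset id;
    each entry holds an "asset" (with a "name") and a list of "regions",
    and every region carries "tags" and a "boundingBox".
    """
    try:
        project = json.loads(text)
    except json.JSONDecodeError as err:
        # Report where the file is malformed instead of a generic failure.
        raise ValueError(
            f"Invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"
        ) from err

    assets = project.get("assets") if isinstance(project, dict) else None
    if not isinstance(assets, dict) or not assets:
        raise ValueError('No "assets" object found; was this exported as VoTT JSON?')

    counts: Counter = Counter()
    for asset_id, entry in assets.items():
        name = entry.get("asset", {}).get("name", asset_id)
        for region in entry.get("regions", []):
            box = region.get("boundingBox") or {}
            missing = [k for k in ("left", "top", "width", "height") if k not in box]
            if missing:
                raise ValueError(f"{name}: region {region.get('id')} lacks {missing}")
            for tag in region.get("tags", []):
                counts[tag] += 1
    return dict(counts)


# A minimal, hypothetical export with one labeled daisy.
sample = """{"assets": {"a1": {"asset": {"name": "daisy_01.jpg"},
  "regions": [{"id": "r1", "tags": ["daisy"],
               "boundingBox": {"left": 10, "top": 20, "width": 100, "height": 80}}]}}}"""
print(summarize_vott_export(sample))  # {'daisy': 1}
```

A per-tag count also shows at a glance whether some classes have far fewer examples than others. Passing this check does not guarantee Model Builder will accept the file, since its own validation may differ.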

Then comes the training part, which runs on Azure and which you can monitor in real time. Since it takes a good amount of time, instead of sitting back and relaxing, do something productive 👨‍💻

When I first started exploring this, I hit an issue that surprised even the ML.NET product team. Later I learned that my Compute needs to be dedicated rather than low priority or shared, and the team has raised an internal bug to correct the error message.

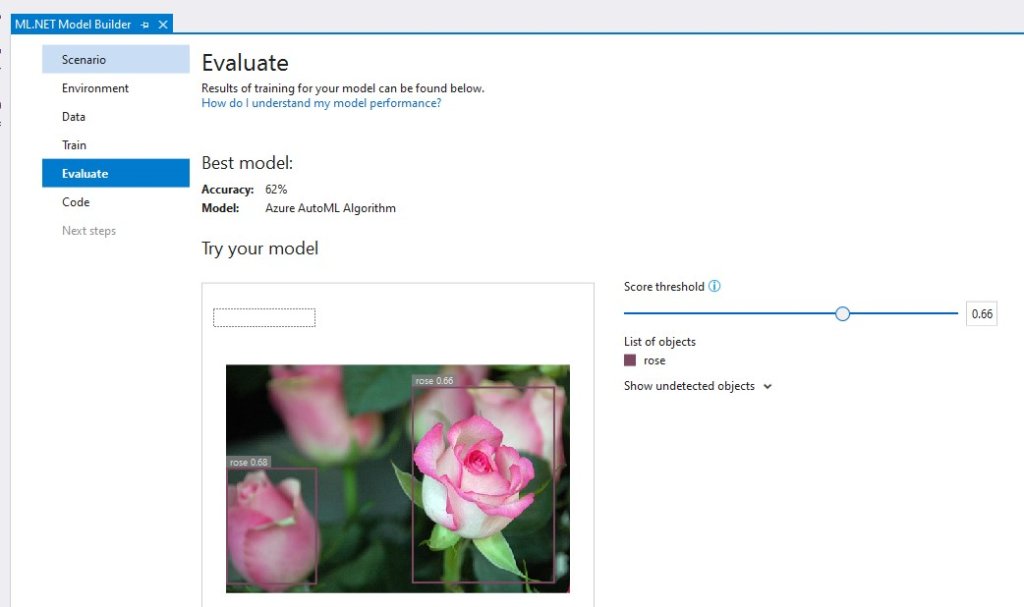

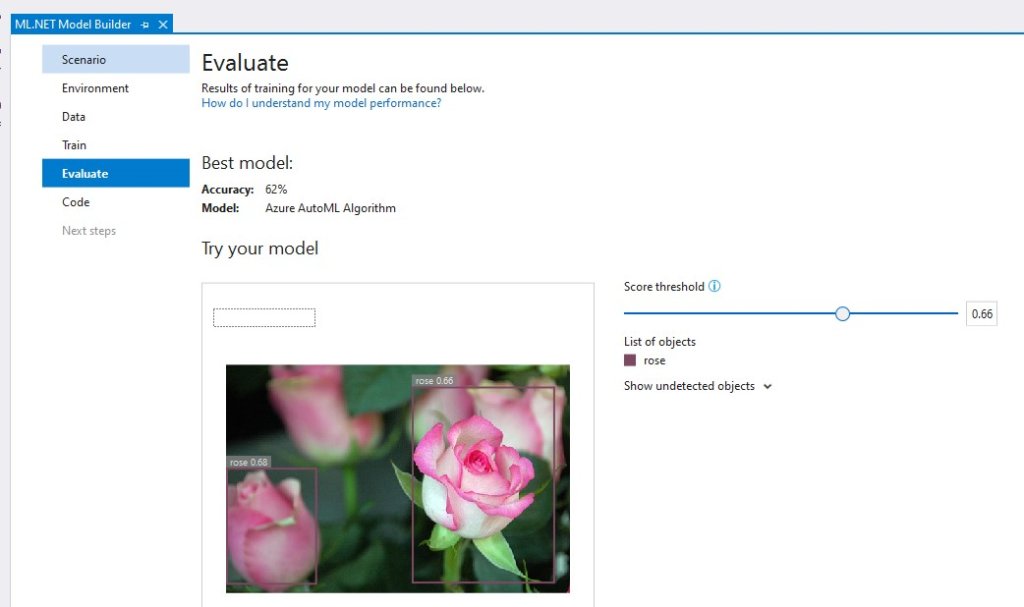

After training is done, you will see an option to Evaluate your model. Simply upload an image that was not part of your training set to test how accurate your model is. I only provided a limited set of pictures, which is why my model’s accuracy is not as good as it could be.
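Model Builder reports its own evaluation results, and I haven’t checked exactly how it computes them. A common, general way to judge whether a predicted box matches a hand-labeled one is Intersection over Union (IoU): the overlapping area divided by the combined area. Here is a minimal Python sketch with boxes given as (left, top, width, height); the 0.5 threshold follows the PASCAL VOC convention, and the function names are hypothetical:

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (left, top, width, height)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    a_left, a_top, a_w, a_h = a
    b_left, b_top, b_w, b_h = b
    # Overlap along each axis (zero if the boxes do not touch).
    overlap_w = max(0.0, min(a_left + a_w, b_left + b_w) - max(a_left, b_left))
    overlap_h = max(0.0, min(a_top + a_h, b_top + b_h) - max(a_top, b_top))
    intersection = overlap_w * overlap_h
    union = a_w * a_h + b_w * b_h - intersection
    return intersection / union if union > 0 else 0.0


def is_match(predicted: Box, labeled: Box, threshold: float = 0.5) -> bool:
    """Count a prediction as correct when it overlaps the label enough."""
    return iou(predicted, labeled) >= threshold


labeled = (0, 0, 10, 10)
print(iou(labeled, labeled))                   # 1.0
print(round(iou(labeled, (5, 0, 10, 10)), 3))  # 0.333
print(is_match((5, 0, 10, 10), labeled))       # False
```

A box shifted by half its width still overlaps by half, yet scores only about 0.33, which is why IoU is a stricter test than "the boxes touch".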

Because the Object Detection scenario is still in Preview, I always got this error after training completed, right before the ONNX model was downloaded to my machine.

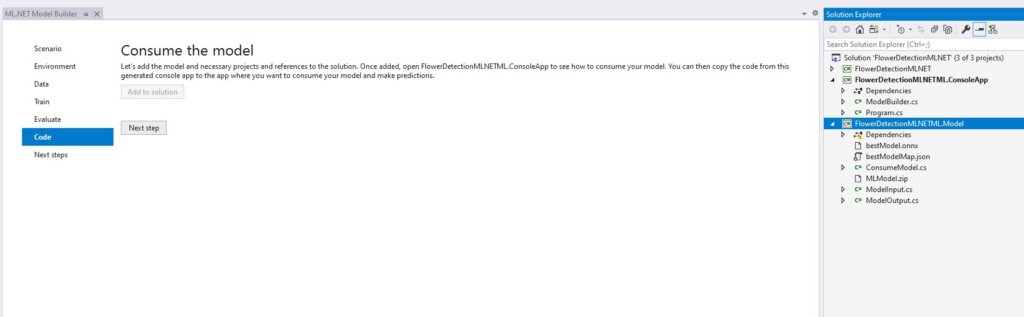

If you’re satisfied with the result, you can generate the code to use the model in your applications. You can also inspect how it produces the results (similar to other ML.NET Model Builder scenarios).

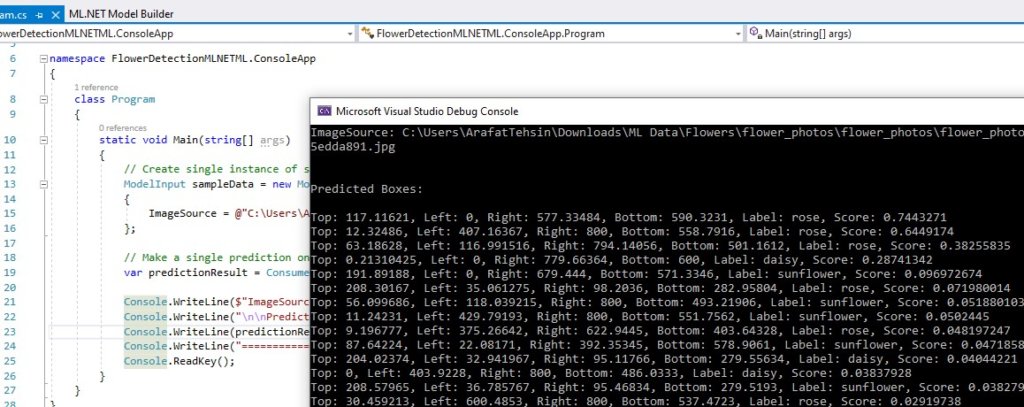

You can always test the code by providing a different image too!

Just a glimpse for friends who want to see the complexity of this model:

Lastly, I was planning to build a better use case than this and show it to you through my UWP app, but I found that the generated ONNX model is not compatible with the Windows.AI.MachineLearning library, so I’ve opened a GitHub issue and will see how it goes.
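I don’t know yet whether this is the cause, but one thing worth checking in a case like this is the ONNX operator set (opset) version the model declares, because each Windows build supports only certain ONNX versions in Windows ML. The Python sketch below reads that value with the `onnx` package (`onnx.load` and the model’s `opset_import` field); the helper names are hypothetical, and the sample input on the last line is made up. Compare the result against the ONNX versions listed in the Windows ML documentation for your target Windows build.

```python
from typing import Iterable, List, Optional, Tuple


def default_opset(opset_imports: Iterable[Tuple[str, int]]) -> Optional[int]:
    """Highest version of the default ONNX operator set ("" or "ai.onnx" domain)."""
    versions = [version for domain, version in opset_imports if domain in ("", "ai.onnx")]
    return max(versions) if versions else None


def read_opset_imports(path: str) -> List[Tuple[str, int]]:
    """(domain, version) pairs declared by an ONNX file; needs `pip install onnx`."""
    import onnx  # imported lazily so default_opset works without the package

    model = onnx.load(path)
    return [(entry.domain, entry.version) for entry in model.opset_import]


# Usage (the file name is a placeholder for the model Model Builder downloaded):
# print(default_opset(read_opset_imports("model.onnx")))
print(default_opset([("", 11), ("ai.onnx.ml", 2)]))  # 11 (hypothetical input)
```

Only the default domain is considered, because custom domains such as `ai.onnx.ml` are versioned separately.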

Until next time

Arafat

Hello Arafat. Excellent explanation. I am trying to use object detection, but I get an error with the JSON file. How do you export it from VoTT? Thanks!

Hi Regis. Thank you so much for your appreciation. May I know what error you’re getting?

Were you able to tag your items, define source and targets?

Hello, thank you for the article. I am also getting an error when loading a JSON file in ML.NET Model Builder: “Cant parse json, please make sure the input json file has a valid format”. I created the file in VoTT. Can you attach an example of your JSON file?

Hi Alexander. Happy to share my JSON. Can you please connect with me on Twitter, LinkedIn or any platform of your choice?